一、hadoop集群模式

1.单机模式

2.伪分布式模式

3.完全分布式模式

二、hadoop伪分布式

在一台主机模拟多主机。Hadoop启动NameNode、DataNode、JobTracker、TaskTracker这些守护进程都在同一台机器上运行,是相互独立的Java进程。在这种模式下,Hadoop使用的是分布式文件系统,各个作业也是由JobTraker服务,来管理的独立进程。在单机模式之上增加了代码调试功能,允许检查内存使用情况,HDFS输入输出,以及其他的守护进程交互。类似于完全分布式模式,因此,这种模式常用来开发测试Hadoop程序的执行是否正确。需要修改的文件如下:

core-site.xml

hdfs-site.xml

mapred-site.xml

三、必备安装

[root@localhost tmp]# yum install java-1.8.0-openjdk //安装java

[root@localhost tmp]# vim /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64

[root@localhost tmp]# source /etc/profile

或者使用oraclejdk

[root@xiangys0134-docker-01 tmp]# java -version

java version "1.8.0_152"

Java(TM) SE Runtime Environment (build 1.8.0_152-b16)

Java HotSpot(TM) 64-Bit Server VM (build 25.152-b16, mixed mode)

四、安装单机hadoop

[root@xiangys0134-docker-01 tmp]# tar -zxvf hadoop-3.2.0.tar.gz -C /usr/local/

[root@xiangys0134-docker-01 tmp]# cd /usr/local/hadoop-3.2.0/

[root@xiangys0134-docker-01 hadoop-3.2.0]# vim etc/hadoop/hadoop-env.sh

添加

JAVA_HOME=/usr/local/java/jdk

五、置Hadoop

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ vim etc/hadoop/core-site.xml

fs.defaultFS

hdfs://localhost:9000

hadoop.tmp.dir

/home/hadoop/grid/disk

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ vim etc/hadoop/hdfs-site.xml

dfs.nameservices

hadoop-cluster

dfs.namenode.name.dir

file:///data/hadoop/hdfs/nn

dfs.namenode.checkpoint.dir

file:///data/hadoop/hdfs/snn

dfs.namenode.checkpoint.edits.dir

file:///data/hadoop/hdfs/snn

dfs.datanode.data.dir

file:///data/hadoop/hdfs/dn

dfs.http.address

0.0.0.0:50070

六、配置ssh

//配置用户免密登陆

[root@xiangys0134-docker-01 hadoop-3.2.0]# useradd hadoop

[root@xiangys0134-docker-01 hadoop-3.2.0]# passwd hadoop

[root@xiangys0134-docker-01 hadoop-3.2.0]# vim /etc/hosts //指定hosts

添加如下内容:

192.168.10.45 hadoop_master

[root@xiangys0134-docker-01 hadoop-3.2.0]# su hadoop //切换到hadoop下

//配置普通用户hadoop公钥私钥

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ ssh-keygen -t rsa

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ cd ~/.ssh/

[hadoop@xiangys0134-docker-01 .ssh]$ cat id_rsa.pub > authorized_keys

[hadoop@xiangys0134-docker-01 .ssh]$ chmod 600 authorized_keys

[hadoop@xiangys0134-docker-01 .ssh]$ ssh localhost //测试ssh连接成功

七、创建目录

sudo mkdir -p /data/hadoop/hdfs/nn

sudo mkdir -p /data/hadoop/hdfs/dn

sudo mkdir -p /data/hadoop/hdfs/snn

sudo mkdir -p /data/hadoop/yarn/nm

[root@xiangys0134-docker-01 ~]# chown -R hadoop. /data/hadoop

八、HDFS集群进行格式化

//授权hadoop用户具体所有权限

[root@xiangys0134-docker-01 local]# chown -R hadoop. hadoop-3.2.0/

[root@xiangys0134-docker-01 local]# su hadoop

[hadoop@xiangys0134-docker-01 local]$ cd /usr/local/hadoop-3.2.0/

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ bin/hdfs namenode -format

九、启动 Hadoop集群

9.1主节点

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ hadoop-daemon.sh start namenode

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ jps

12934 NameNode

12967 Jps

9.2 启动从节点

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ hadoop-daemon.sh start datanode

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ jps

13077 DataNode

12934 NameNode

13114 Jps

十、YARN配置

YARN由两部分组成ResourceManager守护程序和NodeManager守护程序

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ vim etc/hadoop/mapred-site.xml

mapreduce.framework.name

yarn

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ vim etc/hadoop/yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

十一、启动YARN

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ yarn-daemon.sh start resourcemanager

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ jps

13616 ResourceManager

13077 DataNode

12934 NameNode

13659 Jps

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ yarn-daemon.sh start nodemanager

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ jps

13616 ResourceManager

14129 Jps

13077 DataNode

12934 NameNode

13991 NodeManager

十二、启动作业历史服务器

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ mr-jobhistory-daemon.sh start historyserver

[hadoop@xiangys0134-docker-01 hadoop-3.2.0]$ jps

13616 ResourceManager

14212 JobHistoryServer

13077 DataNode

12934 NameNode

13991 NodeManager

14286 Jps

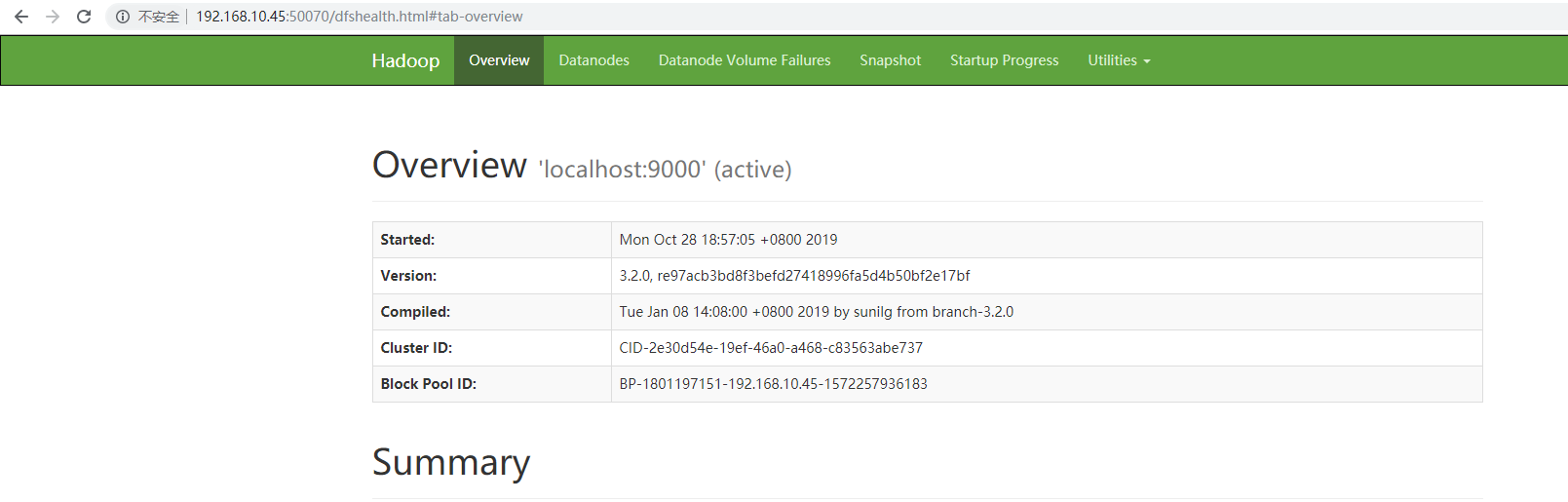

十三、 WEB监控页面

HDFS:http://ip:8088

YARN:http://ip:8088

留言